This page documents some of my work over the years in reverse chronological order (latest work at the top).

Research Interest and Expertise

Human-Computer Interaction (HCI) through immersive display and interaction technologies, specifically Immersive Analytics (IA) with a focus on Virtual Reality (VR), 3D User Interfaces (3D UIs), Hybrid Asymmetric Collaboration, Spatio-Temporal Data Analysis, and Empirical Evaluations.

Open Source Statement [ github.com/nicoversity ]

I have developed various software modules that facilitate the practical implementation of the interfaces and systems in the research activities I am involved in. The modules and materials that I consider useful and relevant are freely available as open source on GitHub for other researchers, practitioners, and students to use and adapt.

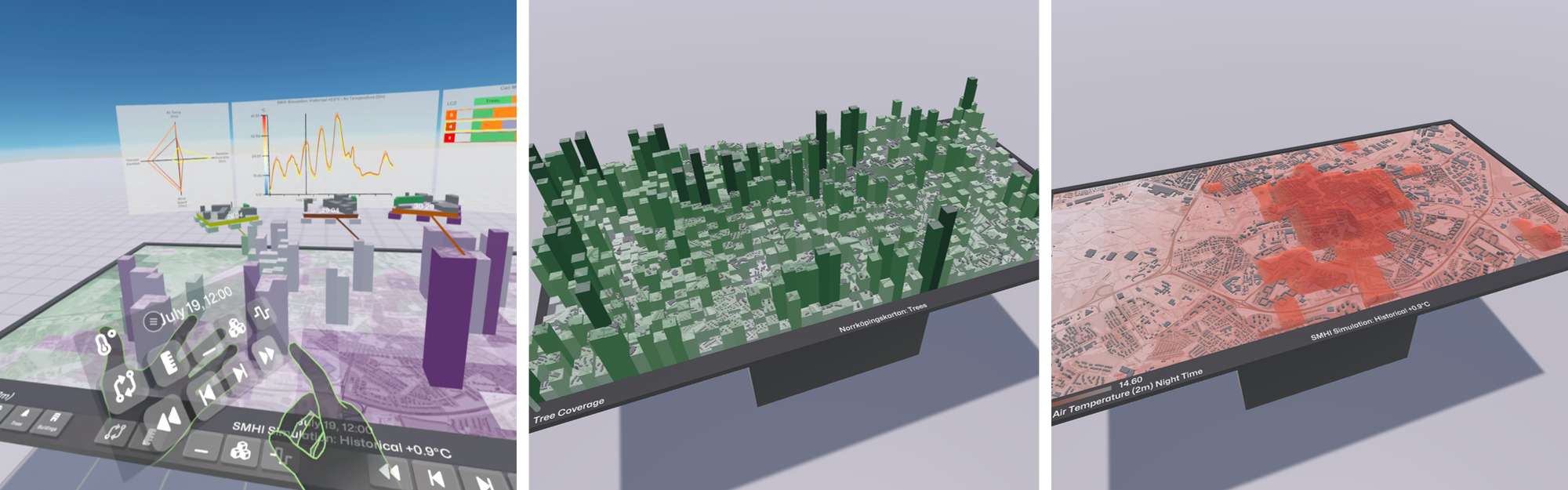

Immersive Analytics for Urban Heat

(2022 - present) Since late 2022, I have been exploring how to visualize and interact with urban climate data (with a focus on urban heat and heat-related phenomena) using immersive display and interaction technologies, within the [ Immersive Analytics for Urban Heat ] project and as part of my postdoctoral research at Linköping University.

With Dr. Katerina Vrotsou, Prof. Dr. Andreas Kerren, Dr. Aris Alissandrakis, Carlo Navarra, Dr. Lotten Wiréhn, and Assoc. Prof. Dr. Tina-Simone Neset

In collaboration with the Swedish Meteorological and Hydrological Institute (SMHI) and Norrköping Municipality

Immersive Analytics and Hybrid Asymmetric Collaboration

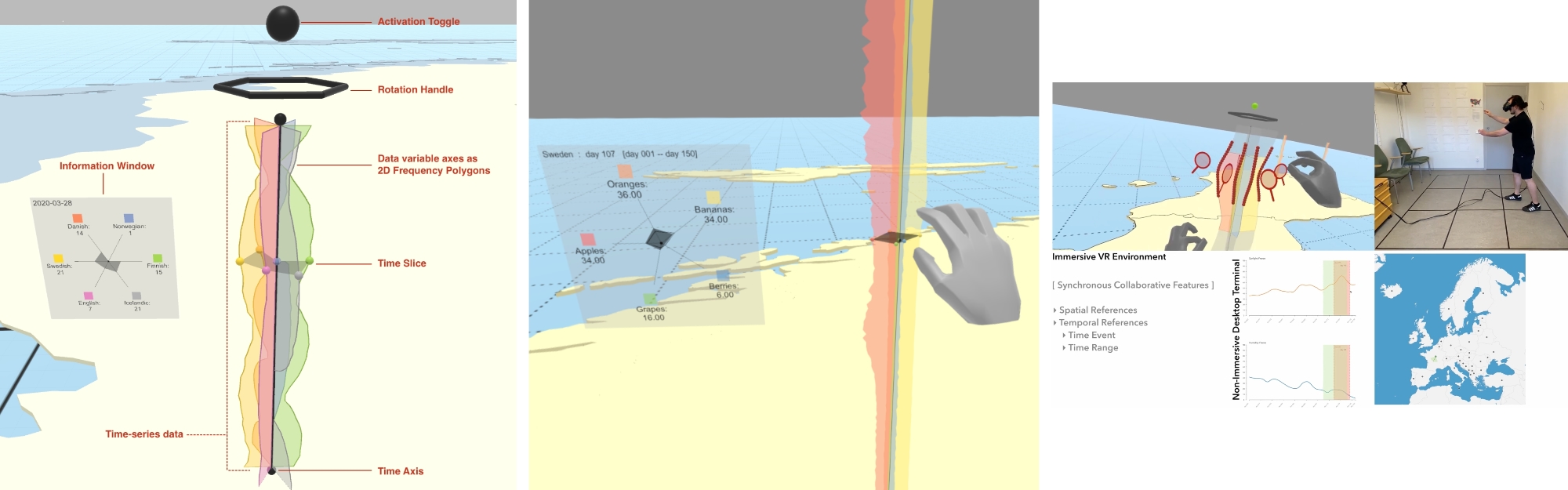

(2020 - 2022) Immersive Analytics is a highly interdisciplinary research area that synthesizes expertise from areas such as Human-Computer Interaction, Virtual Reality, 3D User Interfaces, Information Visualization, Visual Analytics, and Computer-Supported Cooperative Work, among others. My research in this area focuses on (1) 3D UI design approaches for exploring, interacting with, and navigating time-oriented data in immersive VR, and (2) hybrid asymmetric collaboration, i.e., collaboration between multiple users in a scenario where some use immersive (3D) technologies while others use non-immersive (2D) technologies to explore the same dataset at the same time.

To investigate this further, I developed an immersive 3D time visualization derived from the concept of a 2D radar chart (also known as a Kiviat figure or star plot). The additional third dimension is used to visualize the individual data variables of the chart over time. These 3D Radar Charts can be placed in the VR environment, for instance at geospatially relevant locations, and then explored through natural user interaction using 3D gestural input. This allows for data exploration and interaction as well as subsequent meaning- and decision-making. I empirically evaluate these types of applications by conducting user interaction studies in various setups. Important aspects of the VR applications developed over the years are their usability and user engagement, allowing even novices with little or no experience of such applications and technologies to immerse themselves and complete meaningful tasks, observations, and interactions without extensive learning of the interface.
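To make the visual encoding concrete, the following is a minimal Python sketch (not the actual Unity implementation) of how the vertices of a 3D Radar Chart could be computed. It assumes that each variable is normalized by a known maximum value, that the variable axes are spread evenly around the horizontal plane as in a 2D radar chart, and that consecutive time steps are stacked along the vertical axis at a fixed spacing. The function name `radar_chart_3d` and its parameters are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]

def radar_chart_3d(series: Sequence[Sequence[float]],
                   max_values: Sequence[float],
                   radius: float = 1.0,
                   layer_height: float = 0.1) -> List[List[Vec3]]:
    """Return one polygon (a list of 3D vertices) per time step.

    series[t][i] is the value of variable i at time step t.
    Each variable has its own axis in the horizontal x-z plane;
    the vertical y axis encodes time (one layer per time step).
    """
    n = len(max_values)
    layers: List[List[Vec3]] = []
    for t, values in enumerate(series):
        y = t * layer_height  # stack time steps vertically
        polygon: List[Vec3] = []
        for i, (value, vmax) in enumerate(zip(values, max_values)):
            angle = 2 * math.pi * i / n  # evenly spaced variable axes
            r = radius * (value / vmax if vmax else 0.0)  # normalize to [0, radius]
            polygon.append((r * math.cos(angle), y, r * math.sin(angle)))
        layers.append(polygon)
    return layers
```

Connecting the vertices of each polygon yields one radar chart per time step, while connecting corresponding vertices across layers traces how each variable evolves over time.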

With Dr. Aris Alissandrakis and Prof. Dr. Andreas Kerren

[ Ph.D. Thesis 2022 ]

[ VIRE 2023 ]

[ FrontiersInVR 2022 ]

[ NordiCHI 2020 ]

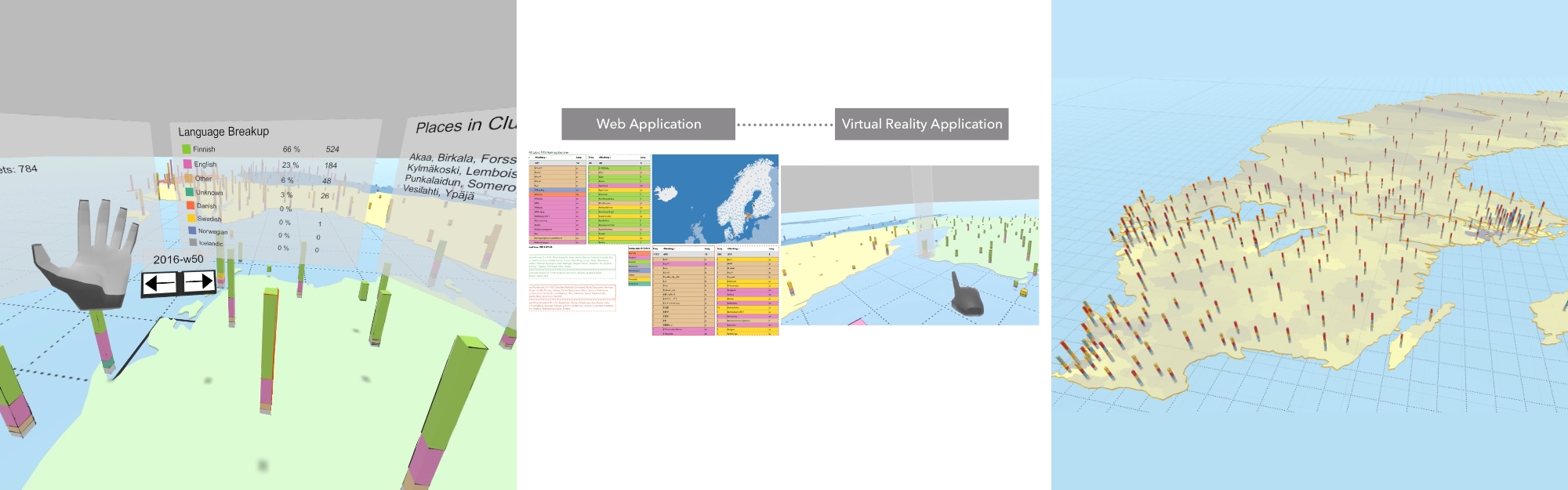

Open Data Exploration in Virtual Reality x Nordic Tweet Stream

(2018 - 2020) Building on the ODXVR work, we established an interdisciplinary collaboration with colleagues at the Department of Languages and the Department of Computer Science at Linnaeus University. The ODXVR application was substantially extended into a new iteration that displays Twitter data collected within the scope of the Nordic Tweet Stream initiative (NTS; a dynamic corpus of geotagged tweets originating from the Nordic countries). Exploring such data is of particular interest to sociolinguistic researchers, for instance to investigate language variability in social networks based on location and time. Within the context of my research, such use cases allow the exploration of interaction and interface design approaches towards the natural use of immersive technologies for data exploration and meaning-making. The presented work is a case study using Twitter data. However, the VR application is data-agnostic and can also visualize data from other contexts. For illustration, I created an additional use case that allows users to explore the Swedish parliamentary election results in VR by municipality and over time, based on open data provided by the Swedish authorities.

Additionally, within the scope of the NTS, I implemented two variants of a non-immersive, interactive information visualization and connected them to the immersive VR application via a WebSocket interface to allow synchronous collaborative exploration of the NTS data: one user inside VR and one user outside. Such a setup can be described as Hybrid Collaborative Immersive Analytics.

With Dr. Aris Alissandrakis, Prof. Dr. Andreas Kerren, and Prof. Dr. Jukka Tyrkkö

[ NordiCHI 2020 ]

[ VARIENG 2019 ]

[ ADDA 2019 ]

[ ICAME 2018 ]

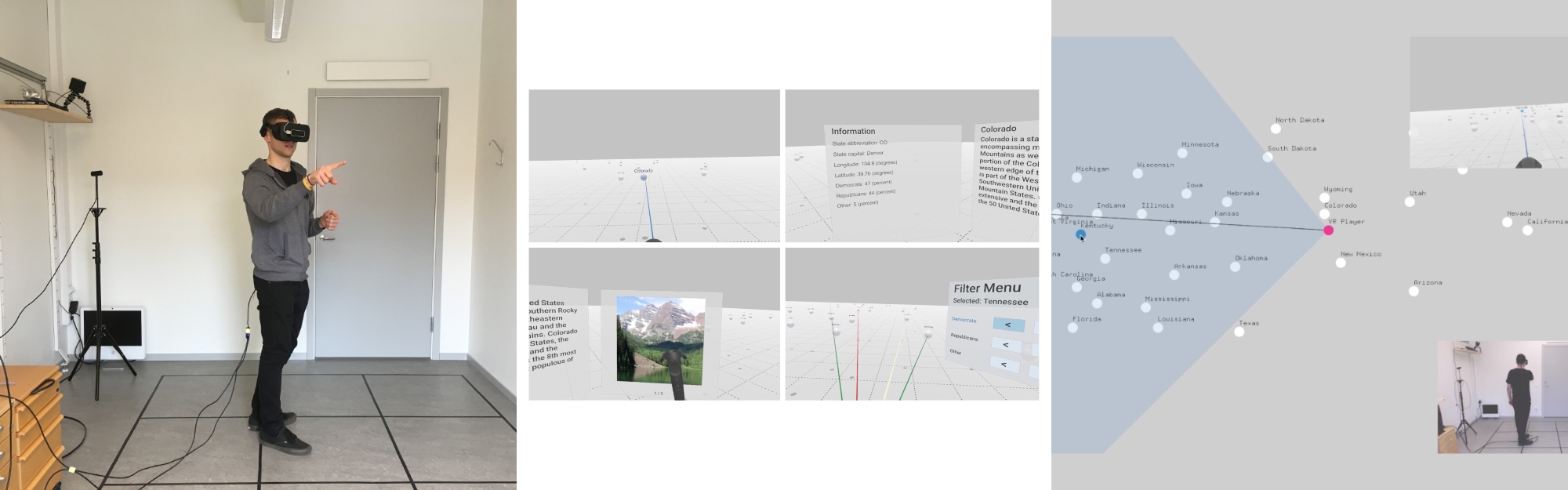

Open Data Exploration in Virtual Reality (ODXVR)

(2017 - 2018) Building on my previous Virtual Reality (VR) work as I started my doctoral studies, I continued investigating approaches to displaying and interacting with open data in virtual reality. For this purpose, I developed an application that can handle different kinds of (open) data, provided the data is mapped to a data model defined specifically for this purpose. In the first use case, users could explore data about the 2016 US presidential election in the immersive VR environment, sourced from Wikipedia (Wikimedia and DBpedia), Wolfram Alpha, and The New York Times. The VR application is developed using Unity.

Furthermore, I developed a companion application based on openFrameworks that presents a bird's-eye overview of the data displayed in the VR environment. I connected both applications using the Open Sound Control (OSC) protocol. The companion application shows the VR user's position and field of view live. A user outside of the VR environment can select nodes, which are then highlighted accordingly in the VR environment. This was an initial experimental effort aimed at facilitating communication between the two users. The VR application runs on a room-scale VR setup based on the HTC Vive. Two additional input prototypes have been implemented: Oculus Rift with a gamepad, and Oculus Rift with 3D gestural input using the Leap Motion. A Node.js server and a MongoDB database provide data and visual structures to both the Unity and openFrameworks applications.
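The OSC link between the two applications can be illustrated with a minimal Python sketch of how an OSC 1.0 message carrying the VR user's pose could be encoded and sent over UDP. The encoding rules follow the OSC 1.0 specification (null-terminated strings padded to a multiple of four bytes, a type tag string starting with a comma, big-endian 32-bit floats). The address pattern `/vr/user/pose`, the argument order, and the function names are hypothetical and not taken from the original applications.

```python
import socket
import struct

def osc_string(s: str) -> bytes:
    """Encode an OSC string: ASCII bytes, null-terminated, padded to a multiple of 4 bytes."""
    data = s.encode("ascii") + b"\x00"
    return data + b"\x00" * (-len(data) % 4)

def osc_message(address: str, *values: float) -> bytes:
    """Build an OSC 1.0 message whose arguments are all 32-bit floats (type tag 'f')."""
    type_tags = "," + "f" * len(values)
    args = b"".join(struct.pack(">f", v) for v in values)  # big-endian float32
    return osc_string(address) + osc_string(type_tags) + args

def send_pose(sock: socket.socket, host: str, port: int,
              x: float, z: float, yaw: float, fov: float) -> None:
    """Send the VR user's floor position, heading, and field of view as one UDP datagram.

    The address "/vr/user/pose" and the argument order are hypothetical.
    """
    sock.sendto(osc_message("/vr/user/pose", x, z, yaw, fov), (host, port))
```

For example, `send_pose(socket.socket(socket.AF_INET, socket.SOCK_DGRAM), "127.0.0.1", 9000, 1.5, -2.0, 90.0, 110.0)` would send one pose update to a listener on port 9000 (host and port chosen arbitrarily). On the openFrameworks side, the bundled ofxOsc addon can receive and decode messages with this layout.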

With Dr. Aris Alissandrakis

[ VIRE 2020 ]

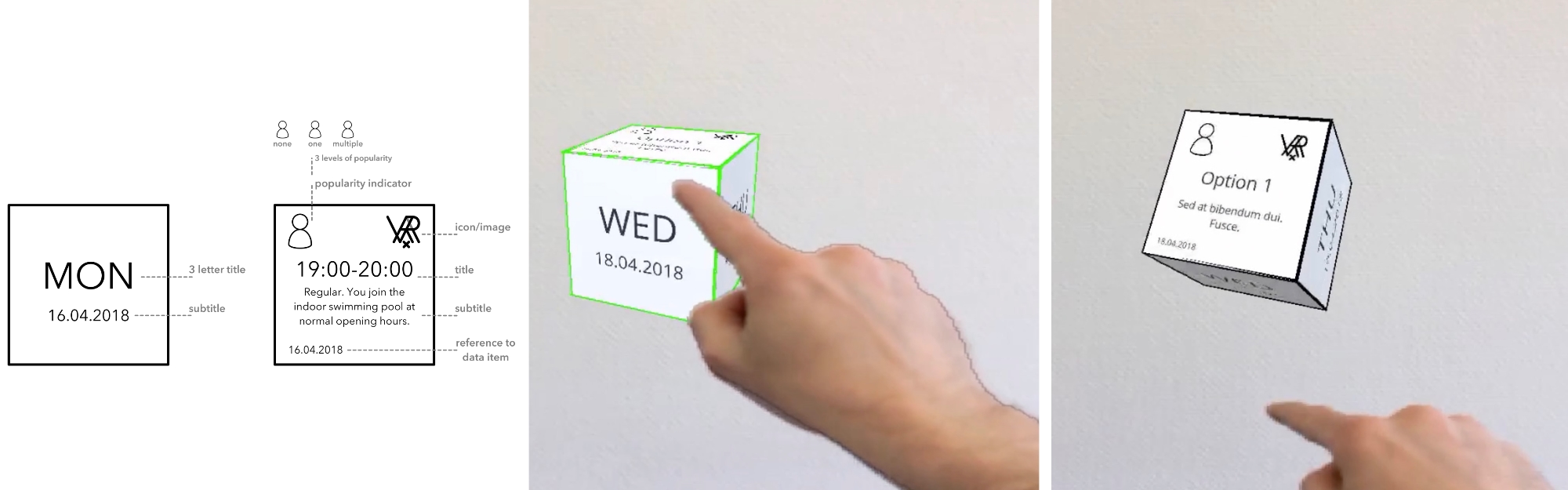

Augmented Reality for Public Engagement - Cube Interface

(2018) Within the context of the PEAR (Augmented Reality for Public Engagement) framework, we developed an interactive interface design prototype that enables users to browse and select data within an Augmented Reality (AR) environment using a virtual cube that can be manipulated through 3D gestural input. The cube can be touched and rotated horizontally and vertically, allowing the user to explore data projected on the cube's faces along two conceptual dimensions. Additional hand postures and gestures can be performed to interact directly with the data on the cube's front face. The PEAR system is data-agnostic and can use data from different sources and contexts. The prototype is implemented in Unity and additionally uses a novel hand posture and gesture recognition SDK that enables 3D gestural input on an off-the-shelf smartphone without additional hardware. The server and database that handle the data projected on the cube's faces are based on Node.js and MongoDB.
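The two-dimensional browsing idea can be illustrated with a small, hypothetical Python model. It assumes that the data is arranged as a grid whose columns correspond to one conceptual dimension and whose rows correspond to the other, that each rotation step advances by one item, and that rotation wraps around. The class `CubeBrowser` and its methods are illustrative names, not part of the PEAR prototype or the gesture recognition SDK.

```python
from dataclasses import dataclass
from typing import Sequence

@dataclass
class CubeBrowser:
    """Hypothetical model of browsing a 2D data grid by rotating a cube.

    Horizontal rotation steps through the first conceptual dimension
    (columns), vertical rotation through the second (rows). The item at
    the current (row, col) is what the cube's front face displays.
    """
    grid: Sequence[Sequence[str]]
    row: int = 0
    col: int = 0

    def rotate_horizontal(self, steps: int = 1) -> str:
        # Wrap around, since a cube can be rotated indefinitely.
        self.col = (self.col + steps) % len(self.grid[self.row])
        return self.front()

    def rotate_vertical(self, steps: int = 1) -> str:
        self.row = (self.row + steps) % len(self.grid)
        # Clamp the column in case rows have different lengths.
        self.col = min(self.col, len(self.grid[self.row]) - 1)
        return self.front()

    def front(self) -> str:
        """Return the item currently shown on the front face."""
        return self.grid[self.row][self.col]
```

Wrapping around mirrors the physical affordance of a cube, which has no first or last face, so the user can keep rotating in either direction.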

With Dr. Aris Alissandrakis

[ VINCI 2018 ]

[ ICPR 2016 ]

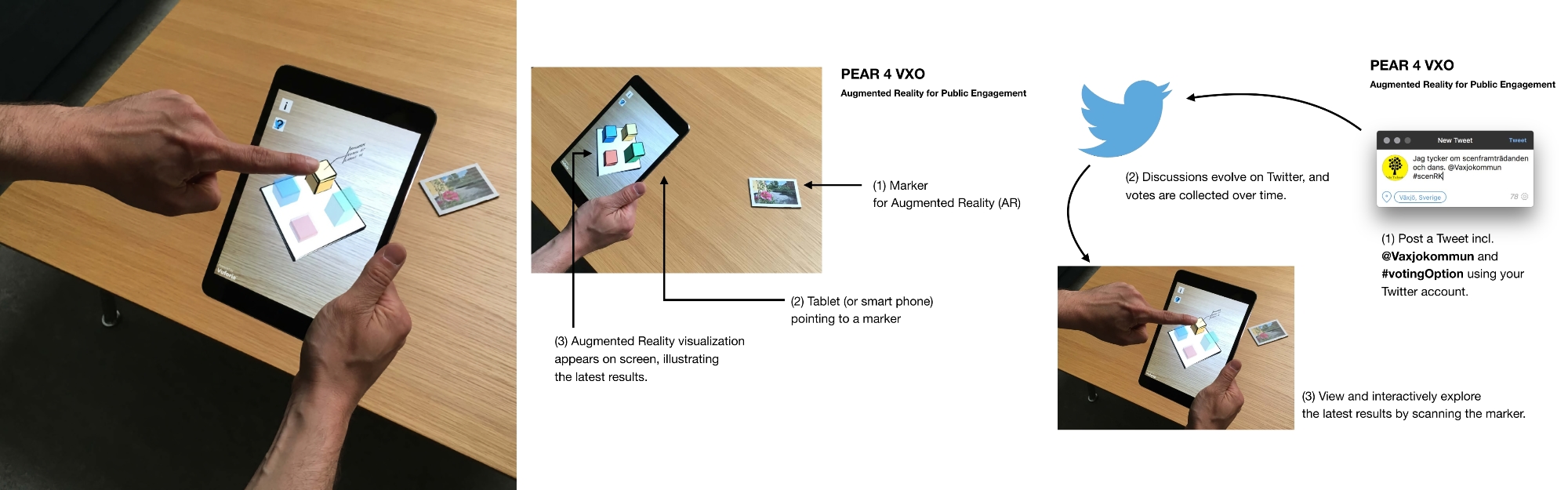

Augmented Reality for Public Engagement (PEAR)

(2016) The PEAR framework, short for Augmented Reality for Public Engagement, aims to enable the public to take part in discussions about, and stay informed on, issues concerning particular geographical areas. People can physically go to a particular location and use their mobile devices to explore an Augmented Reality (AR) visualization on-site. This AR visualization can represent the results of an online voting process or data collected by various sensors, and is updated live as more people participate or new data are collected. Within this project, we intend to explore the use of AR and novel interaction techniques to facilitate public engagement.

PEAR is motivated by the goal of providing people with information and debate updates at the specific site to which an issue is related. People could also obtain such information online, but at both a geographical and a psychological distance. We hope that providing people with access to live information in situ will encourage participation and engagement, and also allow them to reflect from a closer, more involved perspective.

A pilot study was conducted from May to September 2016 in collaboration with Växjö municipality as PEAR 4 VXO, and was related to an ongoing public debate regarding the future development of the Ringsberg/Kristineberg area in Växjö. The public was invited to vote on this issue by using specific hashtags on Twitter (e.g., including #parkRK in a tweet to @vaxjokommun). People were also encouraged to visit the physical location, use their mobile devices to scan an information poster placed there, download our app, and explore (in Augmented Reality) a visualization representing how many votes were cast for each option. The visualization was live and updated as long as people kept voting.

With Dr. Aris Alissandrakis

[ DH 2016 ]

[ LNU: en, sv ]

[ Växjö kommun: sv1, sv2 ]

[ PEAR 4 VXO Information Leaflet: en, sv ]

Virtual Reality / Immersive Interaction - M.Sc. Thesis

(2015) Based on the experiences from my early efforts investigating Virtual Reality (VR) and immersive interaction, I continued in that direction and conducted my master thesis within that context. I investigated an approach to naturally interact with and explore information based on open data within an immersive virtual reality environment, using a head-mounted display and vision-based motion controls. For this purpose, I implemented a VR application that visualizes information from multiple sources as a network of European capital cities, offering interaction through 3D gestural input. The application focuses on the exploration of the generated network and the consumption of the displayed information. I conducted a user interaction study with eleven participants to investigate aspects such as acceptance, estimated workload, and explorative behavior when using the VR application. Additionally, explorative discussions with five experts provided further feedback on the prototype's design and concept. The results indicate that participants were enthusiastic about the novelty and intuitiveness of exploring information in this less traditional way, and that the interface and interaction design challenged them in a positive manner. The experts also accepted the design and concept, valuing both the idea and its implementation. They provided constructive feedback on the visualization of the information and encouraged an even bolder design that makes more use of the available 3D environment. The thesis discusses these findings and proposes recommendations for future work.

With Dr. Aris Alissandrakis

[ ACHI 2016 ]

[ M.Sc. Thesis 2015 ]

Virtual Reality / Immersive Interaction - Initial Efforts

(2014 - 2015) Virtual Reality (VR) has come a long way and is finally here to stay. A lot of research has been conducted in the area of virtual environments and immersive interaction. Applying both outside the context of games and entertainment is not a trivial task and needs further exploration, especially with regard to modern, fast-evolving Internet phenomena such as open data or multivariate data. With the Oculus Rift DK2, a head-mounted display (HMD), and the Leap Motion, a vision-based motion controller that recognizes the user's hand gestures, technologies with the potential to create immersive VR applications are available to a broad audience at comparatively low cost.

As part of the master-level courses Advanced Topics in Media Technology and Adaptive and Semantic Web at Linnaeus University, I conducted foundational background research and explored interface design approaches to visualize content related to social networks within an immersive VR environment. After investigating the state of the art in VR and vision-based motion controls, I conducted a literature survey to gather insights about design approaches and interaction guidelines for the creation of an immersive VR prototype. Deriving conceptual and technical design aspects, I implemented a prototype using the Oculus Rift DK2, the Leap Motion controller, and the Unity game engine. With the prototype up and running, I conducted a user interaction study to gain real-world experience and further initial insights. The study focused on the participants' perception of the content presented within VR and of the vision-based motion controls used to interact with the prototype.

With Dr. Aris Alissandrakis

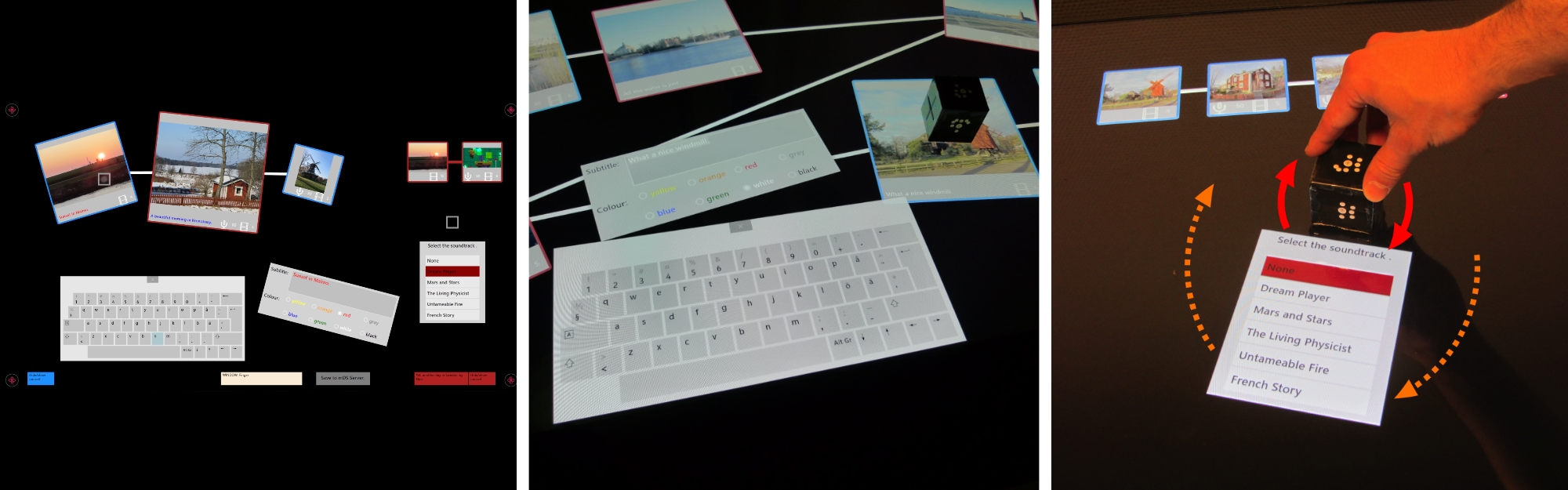

Collaborative mobile Digital Storytelling

(2013 - 2014) The application of Digital Storytelling in learning contexts is a relatively new field of research within Technology-Enhanced Learning. While mobile Digital Storytelling and thus the use of mobile multi-touch devices offer great single user experiences, there are some limitations concerning the actual physical collaboration using these devices, mainly due to the relatively small display size. Larger multi-touch screens, for instance interactive tabletops, hold the potential to overcome such space limitations and provide opportunities for a comfortable co-located collaboration. The combination of mobile digital stories generated by learners together with interactive tabletops provides an innovative area for co-located collaboration and co-creation.

In my bachelor thesis, I evaluated the use of Natural User Interface (NUI) and Tangible User Interface (TUI) design principles within the context of interactive co-located collaboration in technology-enhanced learning activities. For this purpose, I implemented an interactive tabletop application for the Samsung SUR40 with Microsoft PixelSense, using a storyboard-like user interface design approach to support sharing and remixing scenarios within the context of collaborative mobile Digital Storytelling.

With Susanna Nordmark and Prof. Dr. Marcelo Milrad

[ ICALT 2014 ]

[ B.Sc. Thesis 2013 ]

JUXTALEARN

(2014) The JUXTALEARN project at CeLeKT, a research group at Linnaeus University, aimed to explore interactive in-situ display applications that facilitate new video-based activities around digital displays, with the objective of supporting curiosity and engagement throughout learner communities. A public display video application was developed that presents contextual information as well as quizzes related to video content, and displays the results of the answered quizzes. The public displays and their applications are located in schools, making them easily accessible to pupils and students, who are provided with a mobile application to participate in the quizzes and interact with the overall system.

As a software developer, I was responsible for creating the dynamic quiz results visualization, which I implemented using web technologies such as the D3.js library.

With Maximilian Müller, Alisa Sotenko, Dr. Aris Alissandrakis, Dr. Nuno Otero, and Prof. Dr. Marcelo Milrad

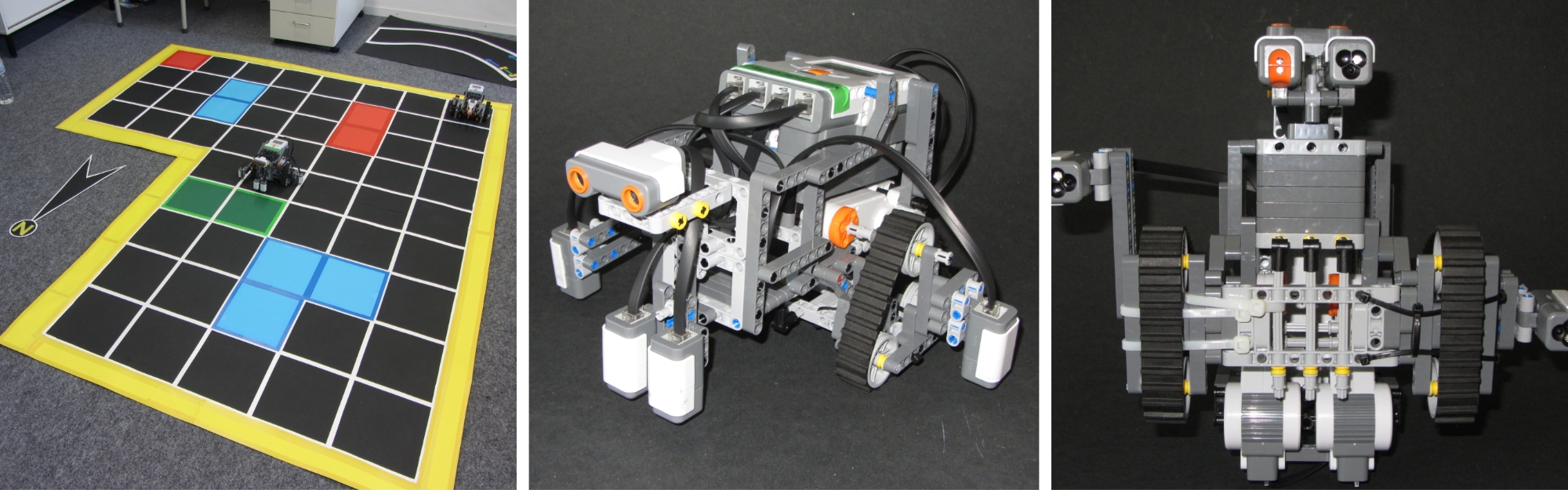

Robotics: Cartographer based on LEGO Mindstorms

(2012) In a group project during the fifth term of my bachelor studies, we built a robot capable of mapping an unexplored area based on a tile grid. On the one hand, the project served as a prototype for a territory-exploration robot; on the other, it investigated the possibilities and constraints of the LEGO Mindstorms Education set.

The final concept and prototype was a mapping robot that sends exploration data via Bluetooth 2.0 to a tablet computer running a corresponding Android application. The app performs several tasks: storing the received data, visualizing it, displaying the robot's current position in the area, simulating the robot's AI by evaluating the most efficient next steps to explore the area, and providing the stored data to other robot units through a public interface.
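The description leaves open how the "most efficient next steps" were evaluated. One common approach is frontier-based exploration: move along the shortest path to the nearest tile that has not been explored yet. The Python sketch below implements this with breadth-first search on the tile grid. The grid encoding (`?` unknown, `.` free, `#` blocked), four-directional movement, and the function name `next_path` are assumptions for illustration, not the original Android implementation.

```python
from collections import deque
from typing import Dict, List, Optional, Tuple

Tile = Tuple[int, int]
UNKNOWN, FREE, BLOCKED = "?", ".", "#"

def next_path(grid: List[List[str]], start: Tile) -> Optional[List[Tile]]:
    """Return the shortest path from start to the nearest UNKNOWN tile.

    The robot moves in four directions and only through FREE tiles;
    returns None when no unknown tile is reachable (area fully explored).
    """
    rows, cols = len(grid), len(grid[0])
    parent: Dict[Tile, Optional[Tile]] = {start: None}
    queue = deque([start])
    while queue:
        r, c = queue.popleft()
        if grid[r][c] == UNKNOWN:
            # Reconstruct the path by following parent links back to start.
            path: List[Tile] = []
            node: Optional[Tile] = (r, c)
            while node is not None:
                path.append(node)
                node = parent[node]
            return path[::-1]
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nr, nc = r + dr, c + dc
            if 0 <= nr < rows and 0 <= nc < cols and (nr, nc) not in parent:
                # Unknown tiles are valid targets; blocked tiles are not traversable.
                if grid[nr][nc] != BLOCKED:
                    parent[(nr, nc)] = (r, c)
                    queue.append((nr, nc))
    return None
```

Because breadth-first search visits tiles in order of distance, the first unknown tile dequeued is the closest one, so the returned path is the fewest-moves route to new territory.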

With Kerstin Günther, Thomas Zwerg, Robert Oehler, Stefan Rulewitz, José Gonzalez, and Ludwig Dohrmann

[ IMI Showtime Summer 2022 ]